Preventing Google from Indexing a Paragraph, Web Page, and PDF

In the vast realm of the internet, visibility on Google is paramount for many website owners. However, there are instances when you may want to hide specific content from Google’s search results. This can be for various reasons such as protecting sensitive information, avoiding duplicate content issues, or simply maintaining a clean and focused search presence. In this blog post, we’ll guide you through the process of no-indexing a paragraph, a webpage, and a PDF on Google.

No-Indexing a Paragraph:

1. No-Indexing a Paragraph:

Sometimes, you might have a webpage with a paragraph or section of content that you want to keep hidden from Google. Here’s how you can achieve that:

- HTML Meta Tag: Open the HTML source code of your webpage and add the following meta tag within the <head> section:

- html

- Copy code

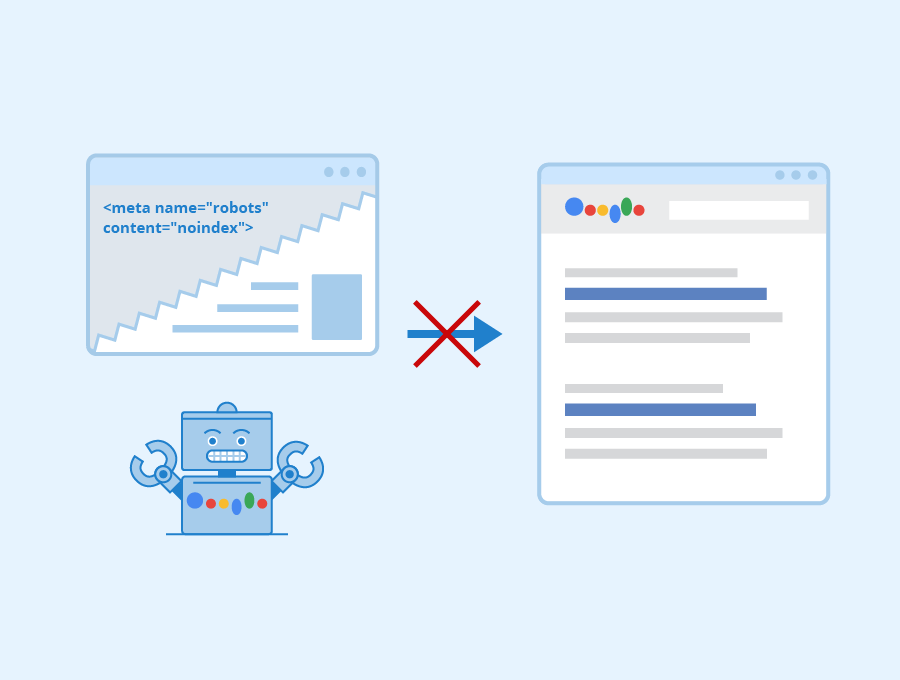

<meta name=”robots” content=”noindex”>

- Place this meta tag just before the <title> tag to ensure that Google doesn’t index the entire page or the specific paragraph.

- Remember to save and upload the modified HTML file to your web server.

2. No-Indexing a Web Page:

If you want to prevent Google from indexing an entire webpage, follow these steps:

- Robots.txt: Create or edit the “robots.txt” file in the root directory of your website. Add the following line:

- javascript

- Copy code

User-agent: *

Disallow: /your-page-url/

- Replace /your-page-url/ with the actual URL of the page you want to exclude from Google’s index.

- Save the robots.txt file, and Google’s crawlers will respect this directive.

3. No-Indexing a PDF:

Sometimes, you may have PDF files on your website that you don’t want to appear in Google’s search results. Here’s how to do it:

- Meta Tag in PDF: Open the PDF file using a text editor like Notepad. Add the following meta tag at the beginning of the document:

- html

- Copy code

<meta name=”robots” content=”noindex”>

- Save the PDF file with the changes and upload it to your website.

- Additionally, you can use the robots.txt method mentioned earlier to disallow Google from crawling and indexing PDFs in a specific directory.

Remember that changes to Google’s index may take some time to propagate, so be patient. Also, keep in mind that while these methods can prevent your content from appearing in Google’s search results, they won’t necessarily keep it entirely private or secure.

However, it’s essential to understand that relying solely on a robots.txt file isn’t a foolproof method to ensure a web page remains unindexed. If a web page is accessible to web crawlers, there’s a chance it might still appear in search results. Imagine a scenario where an external website links to your page (only if the link is do-follow; no-follow links are exempt). In such cases, when a search engine crawler visits that external site, it may end up crawling and indexing your page.

So, it’s crucial to keep in mind that this method isn’t an absolute guarantee of preventing a web page from being indexed.

Using the X-Robots-Tag HTTP Header

An alternative method to prevent a web page from being indexed is by utilizing the X-Robots-Tag. To employ this tag effectively, you’ll need to make changes to your site’s web server configuration.

Here’s an example of how this tag works:

plaintext

Copy code

X-Robots-Tag: noindex

Including this tag informs web crawlers not to index the specific web page. This approach tends to be more reliable than the robots.txt file because it directly communicates with search engines to ensure the page remains unindexed.

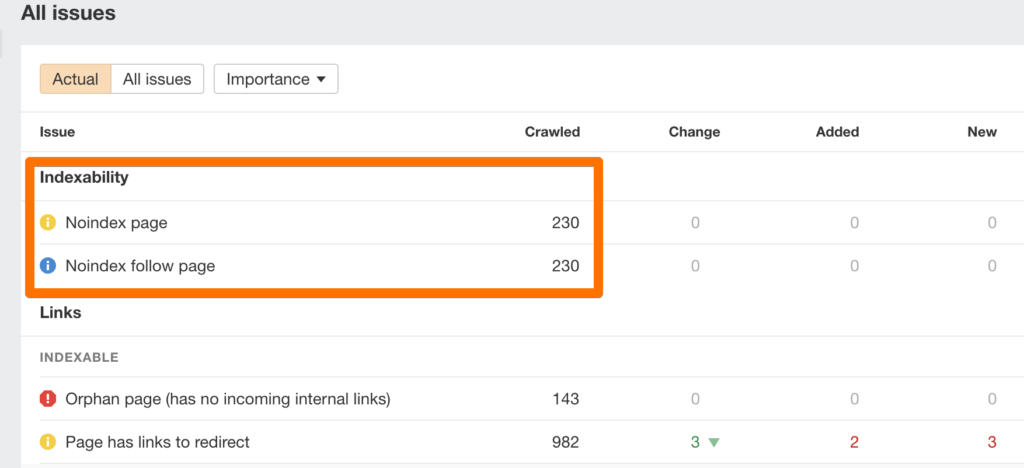

Noindexing a Web Page That’s Already Appearing in Search Results

If a page is already indexed and showing up in search results, you can use the noindex tag while ensuring that the page remains crawlable by search bots. This action will signal to search engines that the specific page should no longer be indexed.

How to Noindex a Paragraph?

Currently, there’s no straightforward method to selectively noindex a paragraph or specific sections of a web page. Google’s John Mueller has stated that while you can use the “googleon/googleoff” tags, they aren’t a guaranteed way to ensure that a specific part of a web page won’t appear in search results. These tags mainly apply to Google Search Appliance and not necessarily to Google.com.

How to Noindex a PDF?

To prevent a PDF file from being indexed by search engines, you can use the following methods:

X-Robots-Tag: Similar to blocking a web page from search results, you can use the X-Robots-Tag to noindex a PDF. Add the X-Robots-Tag to the HTTP header response when serving the PDF file with the following header:

plaintext

Copy code

X-Robots-Tag: noindex

This header explicitly instructs search engine crawlers not to index the PDF file. Ensure that your web server or content management system is configured to include this header when serving the PDF file.

Robots.txt File: Another approach is to use the robots.txt file to disallow search engine crawlers from accessing the PDF file. Add the following directive to your robots.txt file:

plaintext

Copy code

Disallow: /path/to/file.pdf

Replace “/path/to/file.pdf” with the actual URL or path of the PDF file on your website. By disallowing the PDF file in the robots.txt file, you indicate to search engine crawlers not to index it.

Note: If a PDF is already indexed in search results, ensure you add the X-Robots-Tag and confirm that it’s not blocked by the robots.txt file. It should also remain crawlable by search bots to receive instructions not to index the specific PDF.

Alternatives to the Noindex Tag

Here are a few alternatives you can use to exclude content from search results:

Canonical Tag: Canonical tags direct search engines to display the preferred version of a page or content. For example, if you have ten web pages with duplicate content, you can use a canonical tag on the non-preferred pages to indicate that they should be treated as duplicates, with only the preferred page/s being indexed and ranked.

301 Redirects: A 301 redirect is a permanent redirection from one URL to another. It’s useful when a page has been permanently moved to a new URL, and you want to redirect visitors from the old URL to the new one. While it doesn’t directly prevent indexing, it ensures that the old URL is redirected to the new one, and search engines transfer indexing and ranking signals accordingly.

That covers the basics of how to noindex a page, PDF, and paragraph. As you embark on this process, always remember your specific objectives and the type of content you’re working with.